BakeCamera

Overview

Baking in Moonray is done with the BakeCamera camera shader. Like any other camera shader, the BakeCamera is responsible for turning sample locations on the image plane into primary rays (a ray origin and a ray direction). For each pixel location (px, py) in the image being rendered, a (u, v) coordinate is computed as:

u = px / (image_width - 1)

v = py / (image_height - 1)
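As a minimal sketch of the mapping above, the plain Lua function below applies the two formulas directly. The name `pixelToUv` is hypothetical and is not part of Moonray. It assumes the image is at least two pixels wide and two pixels tall, so the divisors are non-zero.

```lua
-- Hypothetical helper (not a Moonray API): map a pixel location to a
-- (u, v) coordinate using the formulas above.
-- Assumes imageWidth > 1 and imageHeight > 1.
local function pixelToUv(px, py, imageWidth, imageHeight)
    local u = px / (imageWidth - 1)
    local v = py / (imageHeight - 1)
    return u, v
end

-- The first pixel of a 512x256 image maps to u = 0, v = 0 and the
-- last pixel maps to u = 1, v = 1.
print(pixelToUv(0, 0, 512, 256))
print(pixelToUv(511, 255, 512, 256))
```

Because the divisor is the image dimension minus one, the first and last pixels land exactly on the edges of the unit square.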

Once this (u, v) coordinate is computed, the corresponding 3D location, P, on the geometry being baked is looked up. Any normal, N, supplied with the mesh is also available. For this lookup to work, the geometry being baked must have a properly UV-unwrapped parameterization; that is, a given (u, v) coordinate must map to at most one point on the geometry’s surface. The BakeCamera does not check for this condition; it assumes it.

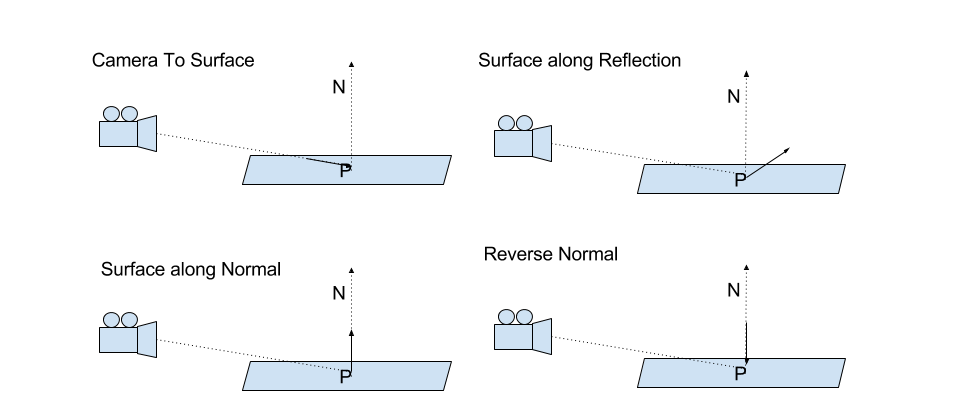

Once the 3D location is known, the primary ray origin and direction can be chosen according to one of four modes:

- 0: camera to surface: The ray direction is chosen as the direction between the location of the bake camera and the 3D surface point. The ray origin is chosen to be just slightly offset from P back along this ray.

- 1: surface along normal: The ray direction is the surface normal. The ray origin is offset just above the surface along the normal direction.

- 2: surface along reflection vector: The ray direction is the reflection vector defined by the bake camera’s location and the surface normal. The ray origin is the surface location, offset slightly along the reflection vector direction.

- 3: reverse normal: The ray direction is the negative normal direction. The ray origin is offset just slightly above the surface.
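The four modes can be sketched in plain Lua as follows. This is an illustration of the descriptions above, not Moonray's implementation. The function `primaryRay`, the vector helpers, and the use of a single `bias` value as the offset distance are all assumptions made for the example. It also assumes N is unit length and that the camera does not coincide with P.

```lua
-- Minimal vector helpers on {x, y, z} tables (hypothetical, for illustration).
local function add(a, b) return {a[1] + b[1], a[2] + b[2], a[3] + b[3]} end
local function sub(a, b) return {a[1] - b[1], a[2] - b[2], a[3] - b[3]} end
local function scale(a, s) return {a[1] * s, a[2] * s, a[3] * s} end
local function dot(a, b) return a[1] * b[1] + a[2] * b[2] + a[3] * b[3] end
local function normalize(a) return scale(a, 1 / math.sqrt(dot(a, a))) end

-- camPos: bake camera location, P: surface point, N: unit surface normal,
-- bias: small positive offset (assumed here to be the offset distance).
-- Returns the ray origin and the unit ray direction.
local function primaryRay(mode, camPos, P, N, bias)
    local toSurface = normalize(sub(P, camPos))
    if mode == 0 then
        -- camera to surface: start slightly before P, back along the ray
        return sub(P, scale(toSurface, bias)), toSurface
    elseif mode == 1 then
        -- surface along normal: start just above P, shoot along N
        return add(P, scale(N, bias)), N
    elseif mode == 2 then
        -- surface along reflection vector: R = I - 2 (I . N) N
        local R = sub(toSurface, scale(N, 2 * dot(toSurface, N)))
        return add(P, scale(R, bias)), R
    elseif mode == 3 then
        -- reverse normal: start just above P, shoot along -N
        return add(P, scale(N, bias)), scale(N, -1)
    end
    error("unknown mode: " .. tostring(mode))
end

-- Camera directly above a point on a floor that faces +Y, mode 3:
-- the origin sits slightly above the floor and the ray points down.
local origin, dir = primaryRay(3, {0, 2, 0}, {0, 0, 0}, {0, 1, 0}, 0.001)
print(origin[2], dir[2])
```

Modes 1 and 3 do not depend on the camera position at all, which is why the camera transform only matters for modes 0 and 2 in this sketch.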

Once the primary ray has been defined, nothing else is specific to baking: all features of Moonray rendering are available, including AOVs. Two features should be avoided, though. Motion blur and depth of field are not implemented in the BakeCamera, so enabling them can produce undesirable or unexpected results.

The following default camera attributes cannot be used or are ignored during baking:

- near and far, for modes 0 (from camera to surface) and 3 (reverse normal). In these modes the near and far clipping planes are computed automatically from the bias parameter so that the baked position falls between the two clipping planes.

- mb parameters (motion blur should not be used with the BakeCamera)

Attribute Reference

Frustum attributes

far

Float

default: 10000.0

Far clipping plane

near

Float

default: 1.0

Near clipping plane

Medium attributes

medium_geometry

SceneObject

default: None

The geometry the camera is 'inside' to which you'd like the medium_material applied. (The typical use case is a partially submerged camera.)

medium_material

SceneObject

default: None

The material the camera is 'inside'. If no medium_geometry is specified, ALL rays will have this initial index of refraction applied.

Motion Blur attributes

mb_shutter_bias

Float

default: 0.0

Biases the motion blur samples toward one end of the shutter interval.

mb_shutter_close

Float

default: 0.25

Frame at which the shutter closes, i.e., the end of the motion blur interval.

mb_shutter_open

Float

default: -0.25

Frame at which the shutter opens, i.e., the beginning of the motion blur interval.

Render Masks attributes

pixel_sample_map

String

default:

Map indicating the number of pixel samples that should be used per pixel (in uniform sampling mode). This is a multiplier on the global pixel sample count specified in SceneVariables. If the provided map has incompatible dimensions, it will be resized.

General attributes

bias

Float

default: 0.003

Ray-tracing offset for primary ray origin

geometry

Geometry

default: None

The geometry object to bake

map_factor

Float

default: 1.0

Increase or decrease the internal position map buffer resolution

mode

Int enum

0 = “from camera to surface”

1 = “from surface along normal”

2 = “from surface along reflection vector”

3 = “above surface reverse normal” (default)

How to generate primary rays

node_xform

Mat4d blurrable

default: [ [ 1, 0, 0, 0 ], [ 0, 1, 0, 0 ], [ 0, 0, 1, 0 ], [ 0, 0, 0, 1 ] ]

The 4x4 matrix describing the transformation from local space to world space.

normal_map

String filename

default:

Use this option to supply your own normals for computing ray directions. Without this option, normals are computed from the geometry and do not take into account any normal mapping applied by the material.

normal_map_space

Int enum

0 = “camera space” (default)

1 = “tangent space”

Use camera space if you generated per-frame normal maps in a pre-pass using the normal material AOV. You probably want tangent space if you are using a normal map that is also used in the surfacing setup.

udim

Int

default: 1001

Udim tile to bake
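For orientation, the plain Lua sketch below shows the standard UDIM numbering convention, in which tile 1001 is the first unit square in UV space and tile numbers increase by 1 per step in u (ten tiles per row) and by 10 per step in v. The helper name `udimToTile` is hypothetical, and how the BakeCamera uses the tile internally is not covered here.

```lua
-- Standard UDIM numbering: udim = 1001 + uTile + 10 * vTile,
-- with uTile in [0, 9]. Hypothetical helper, not a Moonray API.
local function udimToTile(udim)
    local index = udim - 1001
    local uTile = index % 10
    local vTile = math.floor(index / 10)
    return uTile, vTile
end

-- List the tile numbers of a hypothetical 3x2 UV layout, for example
-- to drive one bake per tile.
for vTile = 0, 1 do
    for uTile = 0, 2 do
        print(1001 + uTile + 10 * vTile)
    end
end
```

Under this convention the default value 1001 corresponds to uTile = 0 and vTile = 0.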

use_relative_bias

Bool

default: True

If true, bias is scaled based on position magnitude

uv_attribute

String

default:

Specifies a Vec2f primitive attribute to use as the uv coordinates. If empty, the default uv for the mesh is used. The uvs must provide a unique parameterization of the mesh, i.e., a given (u, v) can appear only once on the mesh being baked.

Examples

Basic

local key = EnvLight("/Scene/lighting/key") { ... }

local lightSet = LightSet("/Scene/lighting/lightSet") {

key,

}

geoms = {}

assignments = {}

local checkerMap = ImageMap("/Scene/surfacing/checkerMap") { ... }

local planeMtl = BaseMaterial("/Scene/surfacing/planeMtl") {

["diffuse color"] = bind(checkerMap),

}

local planeGeom = RdlMeshGeometry("/Scene/geometry/planeGeom") { ... }

local triGeom = RdlMeshGeometry("/Scene/geometry/triGeom") { ... }

table.insert(geoms, planeGeom)

table.insert(geoms, triGeom)

table.insert(assignments, {planeGeom, "", planeMtl, lightSet})

table.insert(assignments, {triGeom, "", planeMtl, lightSet})

GeometrySet("/Scene/geometrySet")(geoms)

Layer("/Scene/layer")(assignments)

-------------------------------------------------------

BakeCamera("/Scene/rendering/camera") {

["node xform"] = translate(0, .75, 3),

["geometry"] = planeGeom,

["mode"] = 0, -- camera to surface

["near"] = .0001,

["far"] = 1,

}

Baking along a normal

local key = EnvLight("/Scene/lighting/key") { ... }

local lightSet = LightSet("/Scene/lighting/lightSet") {

key,

}

geoms = {}

assignments = {}

local sphereMtl = BaseMaterial("/Scene/surfacing/sphereMtl") {

["specular roughness"] = 0,

["diffuse factor"] = 0,

}

local sphereGeom = MmGeometry("/Scene/geometry/sphere") { ... }

table.insert(geoms, sphereGeom)

table.insert(assignments, {sphereGeom, "", sphereMtl, lightSet})

BakeCamera("/Scene/rendering/camera") {

["node xform"] = translate(0, .75, 3),

["geometry"] = sphereGeom,

["mode"] = 1, -- normals

["bias"] = .001

}

GeometrySet("/Scene/geometrySet")(geoms)

Layer("/Scene/layer")(assignments)

Generating a Normal Map

This example is similar to the previous example, except that the surfacing of the sphere contains a normal map. In order to take these normals into account, we can run a pre-pass that generates the normal map and then a second pass that generates a bake map along these normals.

-- oiiotool sphere_normals.exr -ch "R=normal.x,G=normal.y,B=normal.z" -o sphere_normals_rgb.exr

-- maketx sphere_normals_rgb.exr --format exr -d half --nchannels 3 --oiio --wrap periodic --compression zip -o sphere_normals.tx

-- now sphere_normals.tx can be used as an input to our BakeCamera in the 2nd pass.

local key = EnvLight("/Scene/lighting/key") { ... }

local lightSet = LightSet("/Scene/lighting/lightSet") {

key,

}

geoms = {}

assignments = {}

local normalMap = ImageMap("/normalMap") {

["texture"] = ...,

["wrap around"] = true

}

local sphereMtl = BaseMaterial("/Scene/surfacing/sphereMtl") {

["specular roughness"] = 0,

["diffuse factor"] = 0,

["input normal"] = bind(normalMap),

["input normal dial"] = 1.0

}

local sphereGeom = MmGeometry("/Scene/geometry/sphere") { ... }

table.insert(geoms, sphereGeom)

table.insert(assignments, {sphereGeom, "", sphereMtl, lightSet})

BakeCamera("/Scene/rendering/camera") {

["node xform"] = translate(0, .75, 3),

["geometry"] = sphereGeom,

["mode"] = 3, -- reverse normal (-N)

["bias"] = .001,

["near"] = .0001,

["far"] = 1,

}

GeometrySet("/Scene/geometrySet")(geoms)

Layer("/Scene/layer")(assignments)

RenderOutput("/Normals") {

["file name"] = "sphere_normals.exr",

["result"] = 7, -- material aov

["material aov"] = "normal"

}

Once we have generated sphere_normals.tx we can use that texture map as an explicit input to the BakeCamera.

local key = EnvLight("/Scene/lighting/key") { ... }

local lightSet = LightSet("/Scene/lighting/lightSet") {

key,

}

geoms = {}

assignments = {}

local normalMap = ImageMap("/normalMap") {

["texture"] = ...,

["wrap around"] = true

}

local sphereMtl = BaseMaterial("/Scene/surfacing/sphereMtl") {

["specular roughness"] = 0,

["diffuse factor"] = 0,

["input normal"] = bind(normalMap),

["input normal dial"] = 1.0

}

local sphereGeom = MmGeometry("/Scene/geometry/sphere") { ... }

table.insert(geoms, sphereGeom)

table.insert(assignments, {sphereGeom, "", sphereMtl, lightSet})

BakeCamera("/Scene/rendering/camera") {

["node xform"] = translate(0, .75, 3),

["geometry"] = sphereGeom,

["mode"] = 1, -- normal

["bias"] = .001,

["normal map"] = "sphere_normals.tx"

}

GeometrySet("/Scene/geometrySet")(geoms)

Layer("/Scene/layer")(assignments)

Using an Existing Normal Map

The pre-pass in the previous example is unnecessary work if the normals can be supplied from an existing normal map. Such maps are typically provided in tangent space.

BakeCamera("/Scene/rendering/camera") {

["node xform"] = translate(0, .75, 3),

["geometry"] = sphereGeom,

["mode"] = 1, -- normal

["bias"] = .001,

["normal map"] = "path_to_existing_normal_map.exr",

["normal map space"] = 1 -- tangent space

}

UDIMs

local key = EnvLight("/Scene/lighting/key") { ... }

local lightSet = LightSet("/Scene/lighting/lightSet") {

key,

}

geoms = {}

assignments = {}

local checkerMap = ImageMap("/Scene/surfacing/checkerMap") {

["texture"] = "..._<UDIM>.exr",

["wrap around"] = false,

}

local checkerMtl = BaseMaterial("/Scene/surfacing/checkerMtl") {

["diffuse color"] = bind(checkerMap),

}

local planeGeom = RdlMeshGeometry("/Scene/geometry/planeGeom") { ... }

local triGeom = RdlMeshGeometry("/Scene/geometry/triGeom") { ... }

table.insert(geoms, planeGeom)

table.insert(geoms, triGeom)

table.insert(assignments, {planeGeom, "", checkerMtl, lightSet})

table.insert(assignments, {triGeom, "", checkerMtl, lightSet})

GeometrySet("/Scene/geometrySet")(geoms)

Layer("/Scene/layer")(assignments)

BakeCamera("/Scene/rendering/camera") {

["node xform"] = translate(0, .75, 3),

["geometry"] = planeGeom,

-- must be set via -rdla-set udim_to_bake value

["udim"] = udim_to_bake,

["near"] = .0001,

["far"] = 1,

}